Gitaly at Scalingo: Explaining the complete redesign of how we host your applications' Git repositories

At Scalingo, we recently tackled a complete redesign of how we host your applications' Git repositories.

We wanted to share with you the reason behind this redesign (bye bye NFS), the choice we made (good morning Gitaly), and how we implemented it.

Let's get our hands dirty and dive into Scalingo's internal infrastructure!

*Written by Etienne Michon, R&D Engineer at Scalingo*

Reminder: Different Ways To Deploy Code on Scalingo

Scalingo, our PaaS, offers multiple ways to deploy an application:

- Using a source code management integration. Link your application to its repository on services such as GitHub or GitLab, and deploy it automatically when new code is pushed to this repository. This option is currently used by more than 40% of our customers, and this number keeps growing. It's a safe option to make sure a Scalingo application is always in sync with its source code.

- Using our Git integration. Add a specific Git remote to your local repository, and deploy your application with a simple command: git push scalingo master. This option is used by the remaining 60% of Scalingo customers.

- Using our API. Send a compressed archive of your source code to the API to trigger a deployment of your application. This option is used by such a small share of customers that we can't even put a figure on it.

But before jumping in, let's give a bit of context about what led us to consider a new option.

Why Did We Have To Turn To Gitaly for Scalingo?

Handling Scalingo Growth

With Scalingo's continuous growth, we wanted to devise an architecture that is more resilient to failures and easier to maintain.

The existing software stack was getting old, and maintaining it was becoming increasingly challenging.

Git Repository Corruption Here and There

Some customers experienced Git repository corruption when using the Scalingo Git integration, and ran into this kind of puzzling error message:

fatal: '/mnt/git-mount/my-app.mount' does not appear to be a git repository

Even though this problem was rare, each occurrence led to a support request and required manual intervention from an operator.

The Scalingo Legacy Git Infrastructure

The problem stems from our legacy Git architecture. In a simplified view, here is what it looks like:

The source code of all applications hosted on Scalingo is actually stored in a shared NFS folder. This folder is shared among all the builders.

This architecture can lead to various problems. For example, if multiple deployments of the same application are triggered within a short time interval, a race condition may cause the NFS folder to be unmounted in the middle of the second deployment.
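The race is easier to see when the problematic interleaving is replayed step by step. The Python model below is a hypothetical sketch, not Scalingo's builder code: NaiveMounts and RefCountedMounts are illustrative names, and mounting is simulated with a set rather than real mount calls. It contrasts a "mount on start, unmount on finish" policy with one where only the last running deployment unmounts the folder.

```python
class NaiveMounts:
    """Hypothetical: each deployment mounts on start and unmounts on finish."""

    def __init__(self):
        self.mounted = set()  # simulated: app folders currently mounted

    def start(self, app):
        self.mounted.add(app)

    def finish(self, app):
        # Unmounts unconditionally, even if another deployment still needs it.
        self.mounted.discard(app)


class RefCountedMounts(NaiveMounts):
    """Hypothetical: only the last running deployment unmounts the folder."""

    def __init__(self):
        super().__init__()
        self.users = {}  # app name -> number of running deployments

    def start(self, app):
        self.users[app] = self.users.get(app, 0) + 1
        self.mounted.add(app)

    def finish(self, app):
        self.users[app] -= 1
        if self.users[app] == 0:
            del self.users[app]
            self.mounted.discard(app)


def second_deployment_keeps_repo(mounts):
    """Replay two deployments of the same app triggered close together."""
    mounts.start("my-app")   # deployment 1 starts
    mounts.start("my-app")   # deployment 2 starts shortly after
    mounts.finish("my-app")  # deployment 1 finishes while 2 is still running
    return "my-app" in mounts.mounted


print(second_deployment_keeps_repo(NaiveMounts()))       # False
print(second_deployment_keeps_repo(RefCountedMounts()))  # True
```

The counter only helps inside a single process. If the two deployments ran on different builders sharing the same NFS folder, coordinating them would require some form of distributed locking, which hints at why this kind of setup is hard to get right.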

NFS was also known at Scalingo to cause other issues:

- Performance issues

- Network latency for file access

- Processes reading the files may end up in uninterruptible sleep

- Etc.

To make a long story short: NFS was used in various places in Scalingo's early days, and we are gradually moving away from it wherever possible. Git repository hosting is one of the last bastions of NFS at Scalingo.

Good Bye NFS

We felt it was a good time for Scalingo to move Git repositories away from NFS to another architecture, so we started investigating what other source code hosting companies use.

From what we read, we are not alone in looking for a good solution to repository hosting. In particular, the best-known source code hosting companies have communicated about it:

- GitHub announced the creation of an in-house solution named Spokes to get rid of NFS.

- GitLab released Gitaly, an open source service to manage their repositories.

We also explored other options such as:

- Modernizing the current software to make it easier to maintain, and getting rid of NFS by using object storage instead.

- Using Git Ketch, which was once used at Google.

- Using Stemma to manage the Git repositories and implement our own logic for automatic failover.

The Best Solution Out There

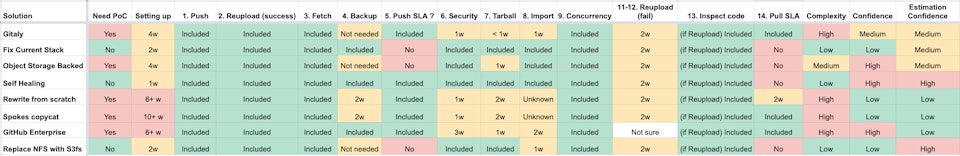

We investigated further and compared the options against various criteria, summarized in the comparison image.

These criteria range from the Git features we need (push, reupload and fetch) to fuzzier criteria such as the perceived complexity of a solution or our confidence in it.

Beyond these criteria, we always do our best to estimate the time needed to implement each solution.

You can also see the full image here.

We eventually settled on Gitaly:

- GitLab developed it to get rid of NFS, just like us \o/

- Horizontally scalable: one can easily add more storage capacity by adding new nodes to the cluster.

- Prevents write errors.

- Highly available: with NFS, a server outage causes downtime for all deployments on the platform. With Gitaly, the storage layer is more resilient to failures.

- Open source: the Gitaly project is released under a license that allows us to use it.

How Does Gitaly Work?

Gitaly achieves all the advantages outlined above thanks to two components: Praefect and Gitaly.

Gitaly is a daemon that runs on every node meant to store Git repository data (no more NFS!). It exposes a high-level Git API over gRPC. This high-level API also means our operators do not need a deep understanding of Git internals: they can work with a handful of high-level API calls.

Praefect distributes incoming connections across the Gitaly nodes. It detects when a Gitaly node fails so that it can dispatch connections to healthy nodes. It also ensures that each repository is replicated on all Gitaly nodes. Praefect is therefore the component responsible for high availability, and making every component highly available is really important for Scalingo!
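To make these three responsibilities concrete, here is a toy Python model of a routing layer in front of several storage nodes. It is not Praefect's actual algorithm; it only illustrates the behaviours described above: reads go to a healthy node holding the repository, a failed node is skipped, and writes are applied to every healthy node while being queued for a node that is down and replayed when it comes back. The class name ToyRouter, the node names, and the "head" values are all hypothetical.

```python
import itertools


class ToyRouter:
    """Hypothetical toy model of a routing layer in front of storage nodes."""

    def __init__(self, nodes):
        self.healthy = {node: True for node in nodes}
        self.repos = {node: {} for node in nodes}  # node -> {repo: head}
        self.pending = []  # (node, repo, head) writes to replay on recovery
        self._rr = itertools.cycle(nodes)  # round-robin order for reads

    def mark(self, node, healthy):
        """Record a health-check result; replay missed writes on recovery."""
        self.healthy[node] = healthy
        if healthy:
            todo = [p for p in self.pending if p[0] == node]
            self.pending = [p for p in self.pending if p[0] != node]
            for _, repo, head in todo:  # replayed in the original order
                self.repos[node][repo] = head

    def write(self, repo, head):
        """Apply a write on every healthy node; queue it for the others."""
        if not any(self.healthy.values()):
            raise RuntimeError("no healthy storage node")
        for node, ok in self.healthy.items():
            if ok:
                self.repos[node][repo] = head
            else:
                self.pending.append((node, repo, head))

    def read(self, repo):
        """Return (node, head) from the next healthy node holding the repo."""
        for _ in range(len(self.healthy)):
            node = next(self._rr)
            if self.healthy[node] and repo in self.repos[node]:
                return node, self.repos[node][repo]
        raise RuntimeError(f"no healthy replica of {repo}")


router = ToyRouter(["gitaly-1", "gitaly-2", "gitaly-3"])
router.write("my-app", "abc123")
router.mark("gitaly-1", False)    # gitaly-1 fails its health check
router.write("my-app", "def456")  # still accepted by the two other nodes
print(router.read("my-app"))      # ('gitaly-2', 'def456')
router.mark("gitaly-1", True)     # gitaly-1 is back and catches up
print(router.repos["gitaly-1"]["my-app"])  # def456
```

Keeping routing, health tracking, and replication in one stateless-looking layer is what lets clients stay unaware of which node actually serves a repository.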

Gitaly At Scalingo

Here is the architecture we settled on to integrate Gitaly into our infrastructure, in a simplified view:

As shown in this figure, the new architecture uses Praefect and Gitaly for Git repository storage. It also includes a new piece of software developed internally, called Git Repository Core.

This software acts as a router: it receives the incoming SSH connection opened by a customer's git push scalingo master command, and directs it either to the NFS repository storage or to the Gitaly storage. This was a strong requirement for us, so that we could release this feature using the canary release pattern: enable the Gitaly backend for a small share of our customers first, then increase that share over time.
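The article does not say how Git Repository Core picks the backend for a given application. One common way to implement a canary rollout is to hash the application name into a stable bucket and compare it with a rollout percentage. The Python sketch below assumes that approach; the function names rollout_bucket and storage_backend, the per-app overrides mapping, and the percentages are hypothetical.

```python
import hashlib
from typing import Dict, Optional


def rollout_bucket(app_name: str) -> int:
    """Map an application name to a stable bucket in [0, 100)."""
    digest = hashlib.sha256(app_name.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def storage_backend(app_name: str, rollout_percent: int,
                    overrides: Optional[Dict[str, str]] = None) -> str:
    """Decide whether a git push for `app_name` goes to "gitaly" or "nfs"."""
    if overrides and app_name in overrides:
        return overrides[app_name]  # explicit per-app pin (e.g. early testers)
    # An app joins the canary group when its bucket is below the rollout
    # percentage, so raising the percentage only ever adds apps to Gitaly.
    return "gitaly" if rollout_bucket(app_name) < rollout_percent else "nfs"


print(storage_backend("my-app", rollout_percent=0))    # nfs
print(storage_backend("my-app", rollout_percent=100))  # gitaly
```

Because the bucket is derived from the name, an application never flips back to NFS when the percentage grows. This sketch ignores moving repository data: in practice a repository must be copied to Gitaly before its pushes are routed there, so a stored per-application flag may be more practical than a pure hash.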

It is also in charge of triggering the deployment of the code hosted on Gitaly.

Thanks to its central position, Git Repository Core allows us to intercept Git traffic and potentially filter it. We plan to add more security features, as well as convenience features for people deploying their applications with the Git integration. For instance, we could add the ability to customize the main branch name (e.g. main rather than the default master branch).
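Deciding whether a push should trigger a deployment, with a configurable branch name, boils down to inspecting the ref updates of the push. The Python sketch below reuses the "<old-oid> <new-oid> <ref-name>" line format that Git documents for the standard input of a post-receive hook; whether Git Repository Core actually relies on hooks is an assumption, and the function name commit_to_deploy and its deploy_branch parameter are hypothetical.

```python
def commit_to_deploy(ref_updates: str, deploy_branch: str = "master"):
    """Return the new commit of `deploy_branch` if this push updated it, else None.

    `ref_updates` holds one "<old-oid> <new-oid> <ref-name>" line per updated
    ref, the format Git passes on standard input to a post-receive hook.
    """
    target = "refs/heads/" + deploy_branch
    for line in ref_updates.splitlines():
        if not line.strip():
            continue
        _old, new, ref = line.split(maxsplit=2)
        # An all-zero new object ID means the branch was deleted: nothing to deploy.
        if ref == target and set(new) != {"0"}:
            return new
    return None


push = "0" * 40 + " " + "a" * 40 + " refs/heads/main\n"
print(commit_to_deploy(push))                        # None: master was not pushed
print(commit_to_deploy(push, deploy_branch="main"))  # the new commit ID of main
```

Checking for an all-zero object ID rather than a fixed 40-character string keeps branch deletions ignored for both SHA-1 and SHA-256 repositories.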

From an internal perspective, Git Repository Core also lets us develop administrative endpoints that ease our operators' day-to-day work.

All elements in the figure are duplicated to improve resilience to server failures.

Conclusion

We are really happy to announce that the Gitaly infrastructure is already deployed in production.

This new architecture will improve the availability of deployments on Scalingo, and make it easier for us to offer new features for deployments using our Git integration.

A small share of our customers currently uses this new architecture, and we are gradually migrating more customers to it, with no problems so far. We plan for 50% of applications to use it by the end of April, and 100% by the end of the summer!

We hope you enjoyed this article. If you have any questions, feel free to contact our support team.


Photo by Pankaj Patel on Unsplash