HTTP/2 and New Routing Features

Two months ago, we announced the release of our new future-proof HTTP router based on OpenResty. Since then, we've built on it and made a number of new features available, HTTP/2 support among them.

Before going further with this blog post, you may want to read some details about our new OpenResty-based HTTP router.

HTTP/2

Browsers and web applications usually talk to each other over HTTP. In May 2015, the second version of this protocol, HTTP/2, was published as a major revision of HTTP. It keeps the same semantics as the previous version (methods, headers and status codes), but it introduces several new features on the transport side, including:

- A binary protocol

- Header compression

- Request multiplexing

These features reduce the number of connections clients need to make requests, as well as the amount of data exchanged with the application. Where latency is high (on mobile connections, for instance), this can improve performance significantly. Since a single connection is enough, HTTP/2 requires only one TCP three-way handshake and one TLS negotiation.
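To make "binary protocol" and "multiplexing" more concrete, here is a minimal Python sketch that decodes the fixed 9-byte header every HTTP/2 frame starts with (RFC 7540, section 4.1): a 24-bit payload length, an 8-bit type, 8 bits of flags and a 31-bit stream identifier. The stream identifier is what lets many requests share a single connection. The function and class names are illustrative; this is not code from our router.

```python
import struct
from typing import NamedTuple

# Frame type codes defined in RFC 7540, section 6.
FRAME_TYPES = {
    0x0: "DATA", 0x1: "HEADERS", 0x2: "PRIORITY", 0x3: "RST_STREAM",
    0x4: "SETTINGS", 0x5: "PUSH_PROMISE", 0x6: "PING", 0x7: "GOAWAY",
    0x8: "WINDOW_UPDATE", 0x9: "CONTINUATION",
}


class FrameHeader(NamedTuple):
    length: int     # payload length in bytes (24 bits)
    type: str       # frame type name
    flags: int      # type-specific flags (8 bits)
    stream_id: int  # stream the frame belongs to (31 bits, 0 = whole connection)


def parse_frame_header(data: bytes) -> FrameHeader:
    """Decode the 9-byte header that precedes every HTTP/2 frame."""
    if len(data) < 9:
        raise ValueError("an HTTP/2 frame header is 9 bytes long")
    # '>BHBBI' = big-endian: 1 + 2 bytes of length, type, flags, 4 bytes of stream ID.
    length_high, length_low, frame_type, flags, stream = struct.unpack(">BHBBI", data[:9])
    return FrameHeader(
        length=(length_high << 16) | length_low,
        type=FRAME_TYPES.get(frame_type, f"UNKNOWN(0x{frame_type:x})"),
        flags=flags,
        stream_id=stream & 0x7FFFFFFF,  # drop the reserved high bit
    )
```

Frames from different requests (for example stream 1 and stream 3) can be interleaved on the same TCP connection; the receiver uses `stream_id` to put each request back together.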

HTTP/2 has plenty of other neat features; you'll find more details in its specifications, RFC 7540 (HTTP/2) and RFC 7541 (HPACK header compression).

How can I enable it for my app?

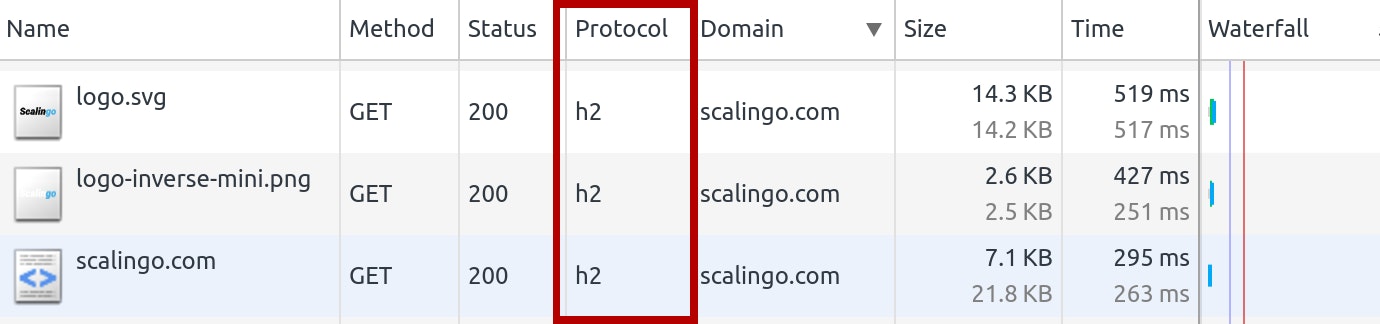

Actually, if you are using a modern browser, you are probably using it already. When a client supports HTTP/2, our proxies automatically negotiate HTTP/2 with it, so there is nothing to configure on your side.

Request ID Header

Another useful feature we added thanks to the deployment of our new HTTP router is the request ID header. Our routing servers are the first entities to receive your HTTP requests, and they now add an X-Request-ID header to each request before forwarding it to your application containers.

This unique identifier lets you pinpoint a specific request in your logs without having to correlate timestamps and source IP addresses. A simple use case is reading logs while requests are executed concurrently: it becomes much easier to de-interlace the logs and tell which line belongs to which request.

```
2017-05-31 18:42:30 [web-1] [7c129eb1-c479-47bb-9c73-d263e2673026] Started GET "/v1/apps/sample-go-martini/containers" for 62.99.220.106 at 2017-05-31 16:42:30 +0000
2017-05-31 18:42:30 [web-1] [7c129eb1-c479-47bb-9c73-d263e2673026] Processing by App::ContainersController#index as JSON
2017-05-31 18:42:30 [web-1] [7c129eb1-c479-47bb-9c73-d263e2673026] Parameters: {"app_id"=>"sample-go-martini"}
2017-05-31 18:42:30 [web-1] [7c129eb1-c479-47bb-9c73-d263e2673026] Completed 200 OK in 8ms (Views: 1.1ms)
2017-05-31 18:42:30 [web-2] [7742c954-7534-4e76-8828-9e548908958d] Started GET "/v1/apps/sample-go-martini/containers" for 62.99.220.106 at 2017-05-31 16:42:30 +0000
2017-05-31 18:42:30 [web-2] [7742c954-7534-4e76-8828-9e548908958d] Processing by App::ContainersController#index as JSON
2017-05-31 18:42:30 [web-2] [7742c954-7534-4e76-8828-9e548908958d] Parameters: {"app_id"=>"sample-go-martini"}
2017-05-31 18:42:30 [web-1] [9caa8d15-7851-41b0-91c4-512b34f20ea4] Started GET "/v1/apps/sample-go-martini/containers" for 62.99.220.106 at 2017-05-31 16:42:30 +0000
2017-05-31 18:42:30 [web-1] [9caa8d15-7851-41b0-91c4-512b34f20ea4] Processing by App::ContainersController#index as JSON
2017-05-31 18:42:30 [web-1] [9caa8d15-7851-41b0-91c4-512b34f20ea4] Parameters: {"app_id"=>"sample-go-martini"}
2017-05-31 18:42:30 [web-2] [7742c954-7534-4e76-8828-9e548908958d] Completed 200 OK in 7ms (Views: 1.1ms)
2017-05-31 18:42:30 [web-1] [9caa8d15-7851-41b0-91c4-512b34f20ea4] Completed 200 OK in 7ms (Views: 0.9ms)
```
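Because every line carries the request ID, de-interlacing can be automated. The Python sketch below groups log lines like the ones above by their bracketed ID. It assumes the ID appears as a lowercase UUID between square brackets, as in this sample; `group_by_request_id` is a hypothetical helper, not a platform tool.

```python
import re
from typing import Dict, Iterable, List

# A bracketed lowercase UUID, e.g. "[7c129eb1-c479-47bb-9c73-d263e2673026]".
REQUEST_ID = re.compile(r"\[([0-9a-f]{8}(?:-[0-9a-f]{4}){3}-[0-9a-f]{12})\]")


def group_by_request_id(lines: Iterable[str]) -> Dict[str, List[str]]:
    """Group log lines by request ID, keeping the order in which IDs first appear."""
    groups: Dict[str, List[str]] = {}  # dicts preserve insertion order
    for line in lines:
        match = REQUEST_ID.search(line)
        if match is None:
            continue  # lines without a request ID are ignored
        groups.setdefault(match.group(1), []).append(line.rstrip("\n"))
    return groups
```

Passing an open log file to `group_by_request_id` returns one list of lines per request, so the three interleaved requests above come out as three separate, readable sequences.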

If your application communicates with other applications, we advise forwarding the X-Request-ID header in those requests as well, so that you can trace the complete execution flow of a given request.

You can find examples of how to handle this header with Ruby on Rails and NodeJS on the X-Request-Id documentation page.
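For Python stacks, the forwarding advice can be sketched with WSGI and the standard library. WSGI servers expose an incoming `X-Request-ID` header as `environ["HTTP_X_REQUEST_ID"]`; the helper names and the downstream URL used below are hypothetical.

```python
from urllib.request import Request

REQUEST_ID_HEADER = "X-Request-ID"
# WSGI servers expose the incoming X-Request-ID header under this environ key.
WSGI_REQUEST_ID_KEY = "HTTP_X_REQUEST_ID"


def downstream_headers(environ: dict) -> dict:
    """Headers to attach to a call made to another application."""
    request_id = environ.get(WSGI_REQUEST_ID_KEY)
    # Only forward the ID when the router actually set one.
    return {REQUEST_ID_HEADER: request_id} if request_id else {}


def build_downstream_request(url: str, environ: dict) -> Request:
    """Prepare (but do not send) a request carrying the same request ID."""
    return Request(url, headers=downstream_headers(environ))
```

Inside a WSGI handler, `urllib.request.urlopen(build_downstream_request("https://other-app.example.com/v1/status", environ))` would then call the other application with the same ID, so its log lines can be matched with yours.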

Request Queueing

The next feature we added is mostly a protection against DDoS attacks. When requests are sent to an application, they are directly forwarded to one of the application's containers. If, for any reason, the application handles requests more slowly than new ones reach the infrastructure, requests start queueing at our routing server level, waiting for the application containers to handle them.

Up to 50 requests can be queued simultaneously per web container; beyond that, requests are dropped and an error is returned to the client.

For instance, if an application has 2 web containers, up to 100 requests can be queued at the same time. If 100 requests are already in the pipe, the 101st request is refused and its client receives a 503 Service Unavailable HTTP response.
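The rule boils down to simple arithmetic: queue capacity = 50 × number of web containers. The Python sketch below models only that admission rule; it is not how our router is implemented, and the class and method names are hypothetical.

```python
import threading

MAX_QUEUED_PER_CONTAINER = 50  # limit stated in this post


class QueueLimiter:
    """Hypothetical model of the admission rule: 50 queued requests per web container."""

    def __init__(self, web_containers: int, per_container: int = MAX_QUEUED_PER_CONTAINER):
        self.capacity = web_containers * per_container
        self.queued = 0
        self._lock = threading.Lock()  # requests may arrive concurrently

    def admit(self) -> bool:
        """Queue a new request, or refuse it (its client gets a 503) when the queue is full."""
        with self._lock:
            if self.queued >= self.capacity:
                return False
            self.queued += 1
            return True

    def release(self) -> None:
        """Called when a container picks up a queued request, freeing one slot."""
        with self._lock:
            if self.queued > 0:
                self.queued -= 1
```

With `QueueLimiter(web_containers=2)`, the first 100 calls to `admit()` succeed and the 101st returns `False`, matching the example above; one `release()` makes room for exactly one more request.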

Quarantine

The last feature we released improves your application's availability when a container crashes. Until now, when a container crashed or was temporarily unavailable, requests kept being routed to this unhealthy container, leading to multiple 502 Bad Gateway responses for the users of the affected applications. This behavior was not optimal and we wanted to fix it.

From now on, when a container becomes unavailable, this is detected instantly and the container is moved to a quarantine pool until it recovers (restart, new deployment, or simply the resolution of a temporary failure). While a container is in quarantine, no requests are sent to it; if the application has more than one container, requests are routed to the other, healthy ones.
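On the routing side, quarantine can be pictured as a container pool that skips quarantined members. This Python sketch assumes simple round-robin balancing, which the post does not specify; `ContainerPool` and its methods are hypothetical names used for illustration only.

```python
import itertools
from typing import Iterable, Optional


class ContainerPool:
    """Round-robin routing that never picks a quarantined container (illustrative model)."""

    def __init__(self, containers: Iterable[str]):
        self.containers = list(containers)
        self.quarantined = set()
        self._cycle = itertools.cycle(self.containers)

    def quarantine(self, container: str) -> None:
        """Stop routing to a container detected as unavailable."""
        self.quarantined.add(container)

    def recover(self, container: str) -> None:
        """Put a container back into rotation once it is healthy again."""
        self.quarantined.discard(container)

    def pick(self) -> Optional[str]:
        """Return the next healthy container, or None if all of them are quarantined."""
        for _ in range(len(self.containers)):
            candidate = next(self._cycle)
            if candidate not in self.quarantined:
                return candidate
        return None
```

With three containers and `web-2` quarantined, `pick()` alternates between `web-1` and `web-3`; an application with a single, quarantined container gets `None`, which is the case where no healthy container is left to serve the request.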

Health checks with exponential backoff are sent to quarantined containers; as soon as a container responds positively, it is added back to the application's container pool and requests start flowing to it again.
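Health checks with exponential backoff can be sketched as follows. The initial delay (1 s), growth factor (2) and cap (60 s) are assumptions chosen for illustration, since the post does not give the router's actual values. `check` and `sleep` are injected so the loop can be exercised without a real container; all names are hypothetical.

```python
import time
from typing import Callable, Iterator


def backoff_delays(initial: float = 1.0, factor: float = 2.0, maximum: float = 60.0) -> Iterator[float]:
    """Yield ever-growing delays (1, 2, 4, 8... with the defaults), capped at `maximum`."""
    delay = initial
    while True:
        yield delay
        delay = min(delay * factor, maximum)


def probe_until_healthy(
    check: Callable[[], bool],
    sleep: Callable[[float], None] = time.sleep,
    max_attempts: int = 10,
) -> bool:
    """Run health checks with growing pauses; True means the container can leave quarantine."""
    delays = backoff_delays()
    for _ in range(max_attempts):
        if check():
            return True  # positive response: add it back to the pool
        sleep(next(delays))  # wait longer after each failed check
    return False  # still unhealthy: keep it quarantined
```

Backing off this way keeps a container that is still restarting from being flooded with health checks, while a container that recovers quickly is put back into rotation after only a short delay.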

Conclusion

Multiple improvements have been made behind the scenes. Our next article will focus on features that can be configured per application, such as sticky sessions, automatic redirection, or forcing HTTPS to access an application. These features will appear on your dashboard soon, so stay tuned!